Just one year ago, AI was not the kind of thing most families talked about over dinner, but today parents are wondering how to talk to their kids about the use of artificial intelligence tools for schoolwork.

Whether you’re concerned about AI like I am, excited by the possibilities, or still trying to figure out where you stand, it’s important that we start having conversations with our kids about the ethics around AI.

When I asked my daughter last year what she knew about AI programs and how kids at her high school were using ChatGPT (the fastest-growing app of all time, now with over 100 million users), she admitted that a few students had used it to “write” essays they then handed in. That’s not good. She also mentioned that she had used it to generate a list of possible AP US Government exam questions to help her study. I thought that was pretty creative!

(Remind me to follow up with another article on positive ways kids can use AI to support their learning.)

As with social media, AI is here, it’s not going away, and our kids are definitely going to figure out their own ways of using it. So we need to start having a new tech talk with them: The AI talk.

I know it can be intimidating to talk about if you don’t know much about AI, so I’ve been looking into the ways teachers and educators at all levels are starting to create rules around its use. As it turns out, only 10% of schools and universities around the globe have established AI rules, according to a recent UNESCO survey, though no doubt that number will increase in the coming months.

Well, leave it to my friend C.C. Chapman (former blogger, current parent, and Wheaton College professor) to write a wonderfully thoughtful set of rules around AI use in his classroom. They really helped me out.


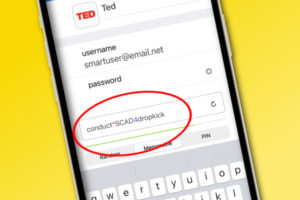

C.C. explained that last semester, he added just this one line about AI use to his syllabi:

> All work must be original for this course and created by the student. Using AI-generated text is unacceptable and is viewed as an honor code violation.

Seems reasonable to me. Until you consider how much more there is to AI than generating a report or an answer to an essay question. What about using Midjourney or Dall-E 2 to make your own images? What about using Soundraw to create your own free “stock” music for use in a Keynote presentation? Or using Looka to design a logo and brand identity for a marketing class? The tools are numerous and growing by the day, right along with the ethical dilemmas springing up with each new addition.

C.C. thought about these things too. So this year, with a little research and support, he created a new set of rules about AI in educational settings that starts with this:

> All work turned in for grading is assumed to be original for this course by the student unless otherwise identified and cited. If any generative AI tools (ChatGPT, Bard, Dall-E, Midjourney, etc.) are used, you must adequately cite them following the APA 7 standards. Failure to do so will be viewed as a Wheaton Honor Code violation.

Thinking of AI tools as sources that require citing? So smart. But the next sentence is the one that I think is particularly helpful for parents looking for ways to talk about AI with our kids:

> Remember that AI tools are excellent for brainstorming and getting started, but they often return false information or “hallucinations,” so they should never be relied on without verification when doing research.

I think that’s an excellent set of words to borrow if you’re a parent, caregiver, or educator yourself. It's simple, honest, and direct, with a clear message that there are pros and cons to AI tools, whether or not we understand them all ourselves yet.

Now that’s a conversation starter.

Photo: Mohamed Nohassi on Unsplash (See? Crediting. It’s not hard!)